What does it mean to run an application in the cloud? What types of clouds are there, and what responsibilities can they take away from me? Or conversely, what does it mean not to go to the cloud? To clarify these questions, we first need to identify the responsibilities. The next step is to show where the cloud can support us.

What are the infrastructure layers?

Servers, hard disks, and networks form the lowest level of infrastructure. Under the hood, there is always real hardware you can touch: computers in cabinets, equipped with hard disks and network cables. Often, hard disks are no longer installed directly in the computers but are operated in their own cabinets and linked to the computers via dedicated networks. Each physical machine can thus be assigned precisely the storage capacity it needs, without anyone moving the actual hardware. This saves working time and thus money.

Cost distribution through efficient use of space and resources

Operating hardware is cheaper if the hardware has standard dimensions. Standardized devices can be easily stacked, and identical parts are easy to replace. The goal is to accommodate as much hardware as possible in the smallest possible space, because efficient use of floor space and room volume saves money. Cabinets with hard disks are now linked to cabinets with CPUs. However, this cost-efficient hardware is too large for running individual applications. Virtualization helps here: dividing the physical hardware into many small virtual machines allows it to be utilized efficiently. With appropriate utilization, there is little waste. As a result, costs go down, and additional resources can be allocated from free capacity when needed.

Further cost savings through virtual machines

The use of virtual machines requires an installed operating system. Here, operational tasks include the provision of patches and security updates. Viewing log files is an essential measure for detecting unusual activity. Detecting anomalies early helps identify attacks or problems with updates quickly. Occasionally, an operating system needs a major upgrade. In all of these tasks, a high degree of automation further reduces cost.

Virtual machines come in many different sizes. They are a means of cutting the physical hardware into manageable pieces. Consequently, the cost of the real hardware is distributed among all users, as are the operational costs for floor space, electricity, and air conditioning. This is a win-win situation for all involved: the data center operator has uniform hardware and can simplify operational processes, and the users of the hardware benefit from comparatively low operating costs.

Process virtualization creates further savings potential

However, the potential for cost optimization is not yet exhausted. With overprovisioning, the operator makes more virtual hardware available than physically exists. This method is ideal for applications that spend much of their time waiting. For many compute-intensive applications, however, it makes little sense.

An alternative to overprovisioning is the use of middleware. Middleware refers to runtime environments for applications. Examples are application servers or container engines. Every virtual machine must be equipped with an operating system, but the operating system occupies part of the hardware capacity. This capacity is then no longer available to the applications. As virtual machines become smaller and smaller, the share of capacity lost to this overhead grows. So it makes no sense to shrink virtual machines further. Instead, to leave no computing capacity unused, each virtual machine has to be utilized as fully as possible. Application servers and container engines help here with process virtualization. The goal is to run as many applications as possible side by side on the virtual machine without conflicts.

Data and applications contain the business logic and are the most important users of hardware resources. Running many applications side by side helps optimize the virtual machine’s utilization. An application usually needs configuration and has to be linked properly with other applications. Running applications involves not only installation but also regular care and maintenance. This care includes viewing logs, providing software updates, and fixing security vulnerabilities.

Applications and Lambda functions

Lambda functions currently form the topmost layer. In practice, this means executing small program snippets on demand in the context of a particular application. The operation of rarely used functions is a typical example. Lambdas and containers are often referred to as serverless computing. They, too, ultimately run on real hardware servers, but the server plays no role in the billing model. What is sold is primarily computing power, measured for example in CPU minutes or number of executions. Consequently, this billing model allows small applications to be operated inexpensively.
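To make the billing argument concrete, the following sketch compares a flat monthly price for an always-on virtual machine with billing by consumed computing time. All prices and workload figures are invented for illustration and are not taken from any provider's price list; the function names are hypothetical as well.

```python
# All figures are hypothetical and only illustrate the billing model.
VM_PRICE_PER_MONTH = 30.00       # assumed flat price for a small always-on VM
PRICE_PER_CPU_SECOND = 0.00002   # assumed pay-per-use price per CPU second


def pay_per_use_cost(executions_per_month: int,
                     cpu_seconds_per_execution: float) -> float:
    """Monthly cost when only the consumed computing time is billed."""
    cpu_seconds = executions_per_month * cpu_seconds_per_execution
    return cpu_seconds * PRICE_PER_CPU_SECOND


def break_even_executions(cpu_seconds_per_execution: float) -> float:
    """Monthly executions at which both billing models cost the same."""
    cost_per_execution = cpu_seconds_per_execution * PRICE_PER_CPU_SECOND
    return VM_PRICE_PER_MONTH / cost_per_execution


if __name__ == "__main__":
    # A rarely used function: 10,000 calls per month, half a CPU second each.
    rarely_used = pay_per_use_cost(10_000, 0.5)
    print(f"Rarely used function: {rarely_used:.2f} per month")
    print(f"Always-on VM:         {VM_PRICE_PER_MONTH:.2f} per month")
    print(f"Break-even at about {break_even_executions(0.5):,.0f} executions per month")
```

Under these assumed numbers, the rarely used function costs a small fraction of the always-on machine, and the flat price only pays off once the function is called millions of times per month. Real price lists usually contain further components, so the break-even point in practice will differ.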

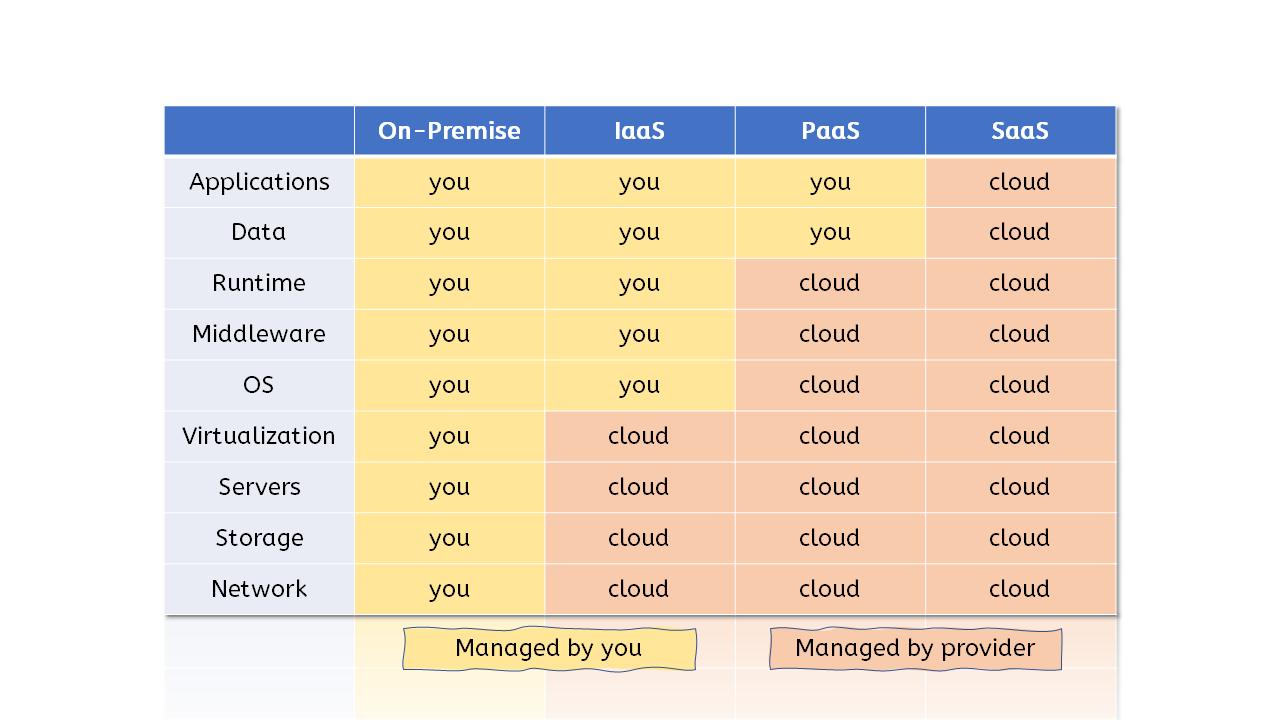

On-Premise

If you run your own data center, you are responsible for everything from the hardware to the application. This classic model is called on-premise.

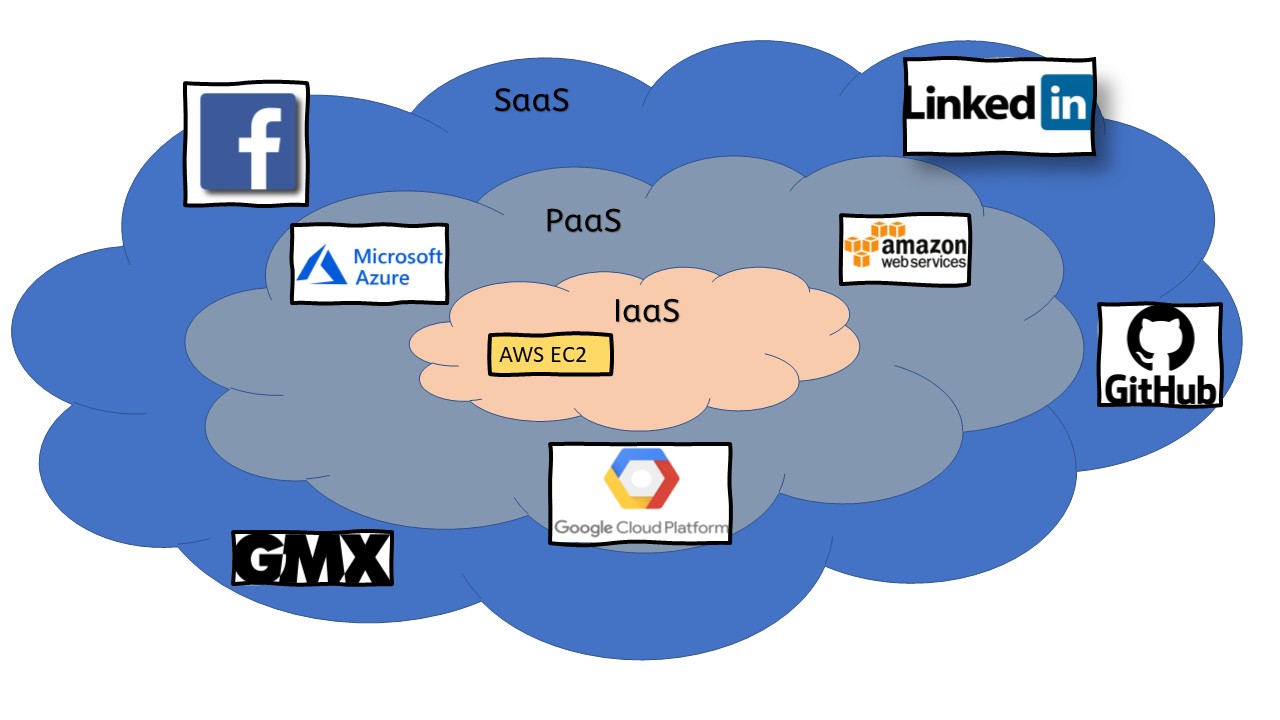

Infrastructure as a Service

This model transfers part of the responsibility to a service provider, also called a cloud provider. The responsibility handed over includes the operation of mainboards with CPUs, hard disks, and network infrastructure. On this basis, the provider offers virtual machines for individual use. The provider takes care of the hardware and its maintenance and ensures the permanent operation of the virtual machine. The user is responsible for the contents of the virtual machine: the user’s responsibility starts with the operating system and ends with the application.

Platform as a Service

Here, the provider takes on additional responsibilities, including the operating system, middleware, or runtimes. Application servers or the Kubernetes container platform are the best-known representatives of this type of service. The user finds a complete runtime environment and is only responsible for the data and applications.

Software as a Service

In this model, the provider takes over the complete management, including the application. Examples of fully managed applications are project management tools, wikis, and content management systems. The provider ensures operation and takes care of performance and the backup of essential data. The user receives a fully managed service and does not have to deal with the operational aspects.

Conclusion
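As a recap, the four operating models described above differ mainly in where responsibility passes from the provider to the user. The following is a minimal Python sketch of that split, using the layers named in this article. The layer names, the `FIRST_USER_LAYER` table, and the `responsibilities` helper are illustrative choices, not an established API.

```python
# Layers from bottom to top, following the article's description.
LAYERS = [
    "hardware",          # servers, hard disks, network
    "virtual machine",   # virtualization of the physical hardware
    "operating system",
    "middleware",        # application servers, container engines, runtimes
    "application",       # data and applications
]

# Index of the lowest layer the user is responsible for in each model.
# Every layer below that index is operated by the provider.
FIRST_USER_LAYER = {
    "on-premise": 0,  # the user runs everything
    "iaas": 2,        # provider: hardware and virtual machines
    "paas": 4,        # provider additionally runs OS and middleware
    "saas": 5,        # provider runs everything, including the application
}


def responsibilities(model: str) -> dict:
    """Map each layer to 'provider' or 'user' for the given operating model."""
    first_user_layer = FIRST_USER_LAYER[model.lower()]
    return {
        layer: "provider" if index < first_user_layer else "user"
        for index, layer in enumerate(LAYERS)
    }


if __name__ == "__main__":
    for model in FIRST_USER_LAYER:
        print(model)
        for layer, owner in responsibilities(model).items():
            print(f"  {layer:<17} {owner}")
```

Modeling the split as a single hand-over index reflects the layered structure: a provider never operates an upper layer without also operating everything beneath it.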

On closer inspection, further details offer potential for optimization. For example, there may be advantages to clustering equal-sized virtual machines on the same hardware. Some virtualization implementations may penalize larger virtual machines when allocating computing time. In effect, such a machine owns more CPUs but receives less computing time overall. The drivers of the virtualization software may impact disk and network throughput. The interplay of network infrastructure, subnets, and distances between data centers may also affect performance.

Cloud providers have long since recognized this. Accordingly, they include many options in their pricing calculations. The billing models explain price differences between machine configurations that appear similar at first glance. A layperson can hardly guess what differences hide behind the abbreviations and type designations. The cloud operators provide competent advisors for selecting the optimum configuration.